Overview

Google Cloud Functions are event-driven functions that let you build on and integrate Google Cloud services.

In this blog we are going to explore a scenario where the goal is to parse a GeoJSON file as soon as it arrives in Google Cloud Storage (GCS) and create CSV files in GCS for subsequent processing.

We decided to use Python as the scripting language for this example; other options include Node.js and Go. A few notes and lessons learned along the way:

The sample data for this blog is obtained from Google Earth. A blog on how to obtain it is available here

The code examples referred to in this blog can be located here

You should develop your script as far as possible in your preferred Python development environment; I used Visual Studio. This saves a lot of time, as the editor provided within the GCP console is cumbersome and slow. Once you have a working version, convert it (in this case, to read from GCS) and save it in the Cloud Function.

In line with best practice when writing Python code, don’t mix spaces and tabs for indentation. Python 3 rejects inconsistent mixing with a TabError, and the console editor makes such problems hard to spot.

You will need to choose your own bucket names, so make sure you make the necessary changes in the code below.
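One way to follow the notes above is to keep the parsing logic free of any GCS calls, so it can be exercised against a local copy of the file first and wired to GCS afterwards. The sketch below only illustrates that pattern and is not the code from this blog; the names `summarise_geojson` and `read_local` are hypothetical, and the bucket constants simply repeat the names used later in this post.

```python
import json
from pathlib import Path

# Bucket names used in this blog; replace them with your own.
LANDING_BUCKET = "lndng_bckt"
PROCESSED_BUCKET = "prcssd_bckt"


def summarise_geojson(text):
    """Return (feature_count, geometry_types) for a GeoJSON FeatureCollection.

    This is a pure function: it takes the file content as a string, so it
    behaves the same whether the text came from a local file or a GCS blob.
    """
    data = json.loads(text)
    features = data.get("features", [])
    # A feature's geometry may be null in GeoJSON, hence the `or {}`.
    geometry_types = sorted(
        {(f.get("geometry") or {}).get("type", "None") for f in features}
    )
    return len(features), geometry_types


def read_local(path):
    """Local stand-in (hypothetical helper) for the later GCS download."""
    return Path(path).read_text(encoding="utf-8")
```

Locally you might call `summarise_geojson(read_local("GEvectors.geojson"))`; once that works, only the reader needs to be swapped for a GCS download.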

Buckets

Before we start with creating the Cloud Function, we need two GCP buckets. The names I used are:

- lndng_bckt (bucket for landing the source file)

- prcssd_bckt (bucket in which the two output files are created)

The source file lands in lndng_bckt, which triggers the event that processes the file and creates two CSV files in the processed bucket (prcssd_bckt).

The sample file that will be processed is sourced from Google Earth, which is a great source of GeoJSON files with many examples available. In this case we are using a file called GEvectors.geojson. Details on how to obtain it are available here

Function

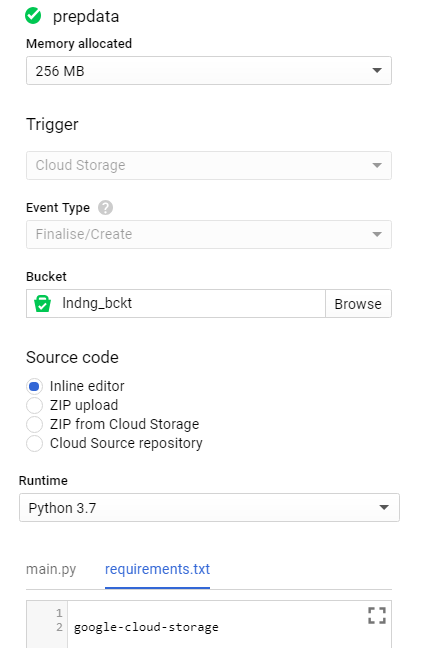

Now it is time to create the function. The following shows the options chosen:

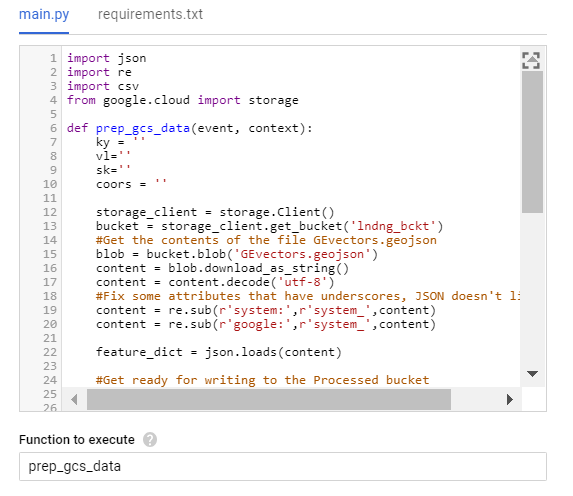

You’ll notice that we have also made an entry in the field labelled requirements.txt. When you deploy, Cloud Functions will download and install the dependencies declared in the requirements.txt file using pip. As we are working with files in GCS, we have entered the dependency google-cloud-storage.

The last step will be to add the Python code to process the GeoJSON file on receipt. This is as follows:
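As a hedged illustration, a minimal sketch of such a function might look like the following. It assumes a Cloud Storage "finalize" trigger on the landing bucket and the two-argument `(event, context)` background-function signature. The layout of the two CSV files (one row per feature, one row per vertex), the output names `features.csv` and `vertices.csv`, and the helper names are assumptions made for this example rather than details taken from the original code.

```python
import csv
import io
import json

# Bucket names from this blog; the default source name is the sample file.
LANDING_BUCKET = "lndng_bckt"
PROCESSED_BUCKET = "prcssd_bckt"
DEFAULT_SOURCE = "GEvectors.geojson"


def _to_csv(header, rows):
    """Serialise a header and rows to CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()


def geojson_to_csvs(text):
    """Split a GeoJSON FeatureCollection into two CSV strings.

    Assumed layout (not taken from the original code):
      1. one row per feature: feature_id, geometry_type, properties as JSON
      2. one row per Point/LineString vertex: feature_id, seq, lon, lat
    """
    features = json.loads(text).get("features", [])
    feature_rows, vertex_rows = [], []
    for feature_id, feature in enumerate(features):
        geometry = feature.get("geometry") or {}
        geom_type = geometry.get("type", "")
        props = json.dumps(feature.get("properties") or {}, sort_keys=True)
        feature_rows.append([feature_id, geom_type, props])
        coords = geometry.get("coordinates", [])
        if geom_type == "Point":
            coords = [coords]
        elif geom_type != "LineString":
            coords = []  # other geometry types are skipped in this sketch
        for seq, position in enumerate(coords):
            if len(position) >= 2:
                vertex_rows.append([feature_id, seq, position[0], position[1]])
    return (
        _to_csv(["feature_id", "geometry_type", "properties"], feature_rows),
        _to_csv(["feature_id", "seq", "lon", "lat"], vertex_rows),
    )


def prep_gcs_data(event, context):
    """Entry point: read the landed file and write two CSVs to GCS."""
    # Imported here so the parsing code above can be run without the library.
    from google.cloud import storage

    event = event or {}
    # Fall back to the blog's names when the test console sends an empty event.
    source_bucket = event.get("bucket", LANDING_BUCKET)
    source_name = event.get("name", DEFAULT_SOURCE)

    client = storage.Client()
    text = client.bucket(source_bucket).blob(source_name).download_as_text()
    features_csv, vertices_csv = geojson_to_csvs(text)

    out_bucket = client.bucket(PROCESSED_BUCKET)
    out_bucket.blob("features.csv").upload_from_string(
        features_csv, content_type="text/csv"
    )
    out_bucket.blob("vertices.csv").upload_from_string(
        vertices_csv, content_type="text/csv"
    )
```

Keeping `geojson_to_csvs` separate from the GCS calls means the conversion can be checked locally before anything is deployed.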

Once you have added the code, make sure that you set the Function to Execute field to prep_gcs_data.

Test

Before we test, you need to make sure you have:

- Created your buckets and changed the names in the Python code above to suit the names you chose

- Uploaded the file called ‘GEVector.GeoJson’ into the ‘landing’ bucket. The sample file can be sourced from here.

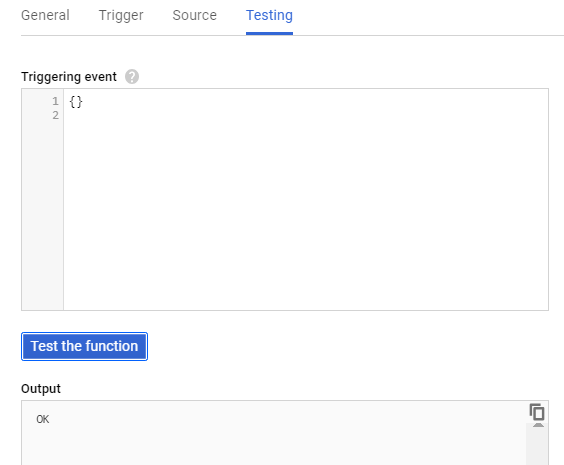

Testing can be done in two ways. The first is to simply select the Testing tab and then Test The Function.

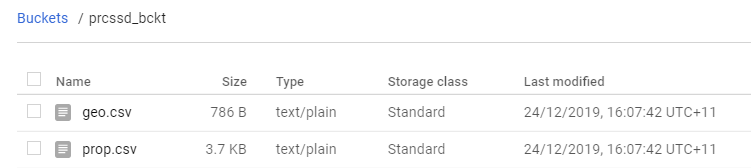

This will trigger the Cloud Function without needing a new file to arrive. The second way is to generate a new file as described in this blog. This will cause the event to fire and the Cloud Function to be executed.

In either case, you can now browse to your ‘processed’ bucket and, if you have followed all of the steps correctly, review the two new files.
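If you would rather check the result from a script than from the console, something along these lines could work. It is a small sketch, not part of the original example: `list_output_files` is a hypothetical helper, and it assumes you pass in a `google.cloud.storage.Client` that is already authenticated against your project.

```python
def list_output_files(client, bucket_name="prcssd_bckt", suffix=".csv"):
    """Return the sorted names of objects in the bucket ending with `suffix`.

    `client` is expected to be a google.cloud.storage.Client, or any object
    with a compatible list_blobs(bucket_name) method.
    """
    return sorted(
        blob.name
        for blob in client.list_blobs(bucket_name)
        if blob.name.endswith(suffix)
    )


# Example use (requires google-cloud-storage and valid credentials):
#   from google.cloud import storage
#   print(list_output_files(storage.Client()))
```

After a successful run the returned list should contain the two CSV files written by the function.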

Hopefully you have found this a useful example. Whilst the GCP interface is a little clunky, the power and flexibility of Cloud Functions clearly make them a valuable part of any solution.